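I have now officially made my Spotify debut: Snack Overflow: 97. Är mikrotjänster fortfarande bättre än skivat bröd? Eller ska vi tillbaka till monoliten? (in English, roughly: “Are microservices still better than sliced bread? Or should we go back to the monolith?”)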

Snack Overflow is a podcast created by some of my colleagues at Avega. It’s made by developers, with a developer or tech-interested audience in mind. Episodes average about 50 minutes in length and are hosted by one or more of the creators, sometimes joined by a guest speaker. The participants are all experienced web developers and, at the time of writing, all based in Sweden. In the above episode, I make my debut as the guest speaker, and we talk about microservices, why they came about as an alternative to monoliths, and whether we are better off today.

Just for the record, the episode is not rehearsed or meticulously planned. We basically get a topic and some broad questions to try to cover before jumping into a video call. Everything is recorded in one take, so some hesitation, filler words or even inaccuracies make it into the final edit. Nonetheless, these are some key takeaways from the episode.

Conway’s Law

Paraphrasing from memory, Conway’s Law is the adage that organizations will develop software architectures that reflect the structure of the organization. I have observed this in more or less all projects I have worked on. A recurring scenario I’ve found myself in is being on a team that needs to implement (and own) a solution that in theory should be trivial (think: service A sends data to service B), but not being able to do so because multiple teams, each assigned certain responsibilities, need to be involved. So A -> B instead becomes A -> X -> Y -> B and we’ve introduced:

- two single points of failure

- two synchronous dependencies

- two points of additional latency

- requirements from Y that B needs to comply with

- blockers (the rate of change will at worst be capped at the rate of the slowest team) and

- the need for multiple teams to talk to each other (or in the worst case, the need for one team to talk to all teams, because the teams don’t talk to each other)
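
To put rough numbers on the first few bullets, here is a minimal sketch of how availability and latency compound along a chain of synchronous calls. The figures (99.9% availability and 20 ms per hop) are made up for illustration, and the calculation assumes the services fail independently of each other, which is rarely exactly true in practice.

```python
from math import prod


def chain_availability(availabilities):
    """Availability of a chain of synchronous calls: every hop must be up."""
    return prod(availabilities)


def chain_latency_ms(latencies_ms):
    """Sequential synchronous calls add up their latencies."""
    return sum(latencies_ms)


# Hypothetical numbers: every downstream service is up 99.9% of the time
# and adds 20 ms per call.
direct = chain_availability([0.999])         # A -> B
via_teams = chain_availability([0.999] * 3)  # A -> X -> Y -> B

print(f"A -> B:           {direct:.4%} available, {chain_latency_ms([20])} ms")
print(f"A -> X -> Y -> B: {via_teams:.4%} available, {chain_latency_ms([20, 20, 20])} ms")
```

With these assumed numbers the expected downtime of the longer chain is roughly three times that of the direct call, and so is the added latency.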

I bring Conway’s Law up a lot, as it’s one of the core considerations one ought to make early in a software architecture (or better yet, organization structuring) journey - probably more so when building a microservice architecture. If the organization is structured arbitrarily (or based on some consideration other than what makes the most sense from a software engineering perspective), the software will be influenced accordingly. At best this is bad for the developer experience; at worst it is extremely wasteful for the whole organization (and in turn the planet!).

For further reading on Conway’s Law, Casey Muratori has a great video, The Only Unbreakable Law, that I can’t recommend enough.

Event-driven Architecture

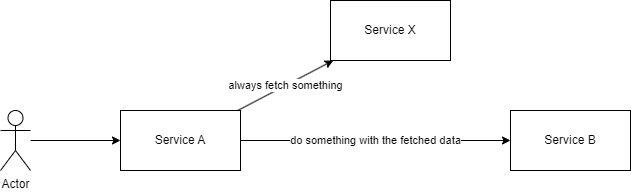

Let’s look at what event-driven architecture is, using an example. Consider this rough architecture proposal with little context or detail. Why build this:

when you can build the following:

Let’s have a closer look at the diagrams. In option 1, a user interacts with Service A (A), which depends on Service X (X) and Service B (B). Every single time the user makes an API call to A, A makes an API call to X before making an API call to B. If X is down, the request fails. If X is slow, the time between the user taking the action and it being reflected in B will be longer (i.e. the user experience will be degraded).

Now let’s look at option 2. A user interacts with A, which checks its own database and makes an API call to B. A never makes an API call to X. If X is down, A still works. If X is slow, A (and in turn the user) is not affected, since A is not making API calls to it. And that is before mentioning the difference in latency between making an API call (which involves a network hop and API-layer authentication, followed by a process making a DB lookup) and just making the DB lookup (which at worst is as quick as the DB lookup in the other option, and at best is a read from memory in the same process that needs the data).

So, assuming we’re not being stopped by some non-technical constraint (like a policy), where it would be necessary to always fetch the data from X, option 2 looks like the superior option.
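
The difference between the two options can be sketched in a few lines of Python. This is an illustration under assumptions rather than a real implementation: the class names are hypothetical, the "event bus" is just a list of in-process callbacks standing in for a real message broker, and it assumes A’s database in option 2 is kept up to date by events published by X.

```python
class ServiceX:
    """Hypothetical upstream service that owns some data."""

    def __init__(self):
        self.up = True
        self.data = {}
        self.subscribers = []

    def get(self, key):
        # Synchronous API call: fails whenever X is down.
        if not self.up:
            raise ConnectionError("X is down")
        return self.data[key]

    def update(self, key, value):
        # X publishes an event on every change; subscribers keep their own copy.
        self.data[key] = value
        for handler in self.subscribers:
            handler({"key": key, "value": value})


class ServiceB:
    def __init__(self):
        self.received = []

    def handle(self, payload):
        self.received.append(payload)


class ServiceAOption1:
    """Option 1: A calls X on every user request."""

    def __init__(self, x, b):
        self.x, self.b = x, b

    def handle_request(self, key):
        value = self.x.get(key)  # synchronous dependency on X
        self.b.handle(value)
        return value


class ServiceAOption2:
    """Option 2: A reads from its own store, populated by X's events."""

    def __init__(self, x, b):
        self.local = {}
        self.b = b
        x.subscribers.append(self.on_event)

    def on_event(self, event):
        self.local[event["key"]] = event["value"]

    def handle_request(self, key):
        value = self.local[key]  # local lookup, no call to X
        self.b.handle(value)
        return value


x, b = ServiceX(), ServiceB()
a1, a2 = ServiceAOption1(x, b), ServiceAOption2(x, b)
x.update("price", 42)
x.up = False  # simulate an outage of X

print(a2.handle_request("price"))  # still served from A's local copy
try:
    a1.handle_request("price")
except ConnectionError as err:
    print("option 1 failed:", err)
```

The trade-off in this sketch is that A’s local copy can lag behind X, so option 2 only fits when slightly stale data is acceptable.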

Now consider that A, B and X are owned by separate teams. In fact, X is not part of the same organization as A and B. We have even less control over the user experience in option 1 than we thought. X can kill A. And who are the users going to complain to? You guessed it: A. Oh, and did we consider the scenario where A explodes in popularity and grows to handle orders of magnitude more requests per time period? A might even take down X with the increase in traffic. X might have a cap on connections or requests that we didn’t know about until the peak of our service’s popularity - the worst possible time to discover it. X might be able to fix the problem with scaling or quota changes, but remember that X is a team outside our organization. We don’t know how quickly X will be able to implement a fix.

In short - event-driven architecture might be one of the better tools we have to decouple services (and because services reflect organizations, it is also a tool to decouple teams). I bring the paradigm up in the podcast as an example of the right way of implementing microservices. I also mention that I’ve very rarely seen it used to its full potential within an organization, and therefore I can’t say that I know of any examples where an organization has done microservices “the right way”. More often they land in some hybrid solution, with decoupled pieces of code that have synchronous connections to a monolith or directly to the same database, making a distributed monolith.

Visualizing your Entire Architecture

Lastly, one topic we discussed in the podcast was the general need for some visualization of the high-level architecture. I specifically talked about the need to create a diagram of the relevant architecture before you commit to making changes in a system. However, regardless of what stage of the system’s lifecycle you are in, this diagram is really nice to have. The holy grail would be to generate one automatically.

Now, I’ve worked on solutions using Backstage, AWS X-Ray and Sentry, and even implemented somewhat complicated scripts that scan entire Git servers and try to extract the relevant metadata, but never quite reached full 100% visualization, especially when working with microservices. Two services could be using different programming languages, two codebases could implement their HTTP clients differently, and the list of challenges goes on.
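
To give a feel for the scanning approach and why it falls short, here is a toy sketch, not one of the scripts I actually wrote. It assumes a made-up convention where services call each other at `http(s)://<service-name>.internal/...`, searches each codebase’s text for such URLs, and emits Graphviz DOT text. The service names and source snippets are hypothetical.

```python
import re

# Hypothetical convention: services are reachable at http(s)://<name>.internal/...
CALL_PATTERN = re.compile(r"https?://([a-z0-9-]+)\.internal")


def find_dependencies(sources):
    """Map each service to the set of services its source code appears to call.

    `sources` maps a service name to the concatenated text of its codebase.
    """
    return {
        service: set(CALL_PATTERN.findall(code)) - {service}
        for service, code in sources.items()
    }


def to_dot(dependencies):
    """Render the dependency map as Graphviz DOT text."""
    lines = ["digraph architecture {"]
    for service in sorted(dependencies):
        lines.append(f'  "{service}";')
        for target in sorted(dependencies[service]):
            lines.append(f'  "{service}" -> "{target}";')
    lines.append("}")
    return "\n".join(lines)


# Made-up snippets in different languages, treated purely as text.
sources = {
    "service-a": 'requests.get("http://service-x.internal/items")\n'
                 'requests.post("http://service-b.internal/orders")',
    "service-b": "// no outgoing calls",
    "service-x": "fetch(`https://service-y.internal/v1/things`)",
}

print(to_dot(find_dependencies(sources)))
```

A text search like this misses URLs that are built at runtime or read from configuration, as well as dependencies that go through message queues or shared databases, which is a large part of why the automatically generated diagram stays out of reach.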

Conclusion

Given the very low barrier to entry for me to participate, it’s likely that this wasn’t my last guest appearance on a tech podcast. It’s also fun to be part of something that reaches a public audience. So until next time, I’ll make sure to figure out how to make my audio crisper.